The world of concept art has undergone a remarkable transformation over the past decade, driven by advances in artificial intelligence. What began as experimental algorithms has evolved into sophisticated systems capable of generating polished character concepts in seconds. This post traces that journey and examines how AI has reshaped digital concept creation.

The Early Days: Pattern Recognition and Style Transfer

The integration of AI into concept art began around 2015 with relatively simple applications. Early systems relied primarily on neural style transfer, which applies the visual characteristics of one image, such as its brushwork, palette, and texture, to the content of another. Although revolutionary at the time, these systems could only restyle existing images; they could not generate original content.

Concept artists experimented with these tools to create mood boards and quick style explorations, but the results required substantial manual refinement. These early AI assistants were curiosities rather than practical production tools.

Early neural style transfer examples from 2015-2016 showing basic pattern application

The GAN Revolution: Creating Something from Nothing

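The true revolution came with Generative Adversarial Networks (GANs). First proposed in 2014, GANs had matured by around 2017 to the point of producing convincing images. A GAN consists of two neural networks, a generator and a discriminator, that compete against each other: the generator tries to create images the discriminator mistakes for real ones, while the discriminator learns to tell real images from generated ones. As each network improves, the generated images become increasingly convincing. For concept artists, this meant AI could generate original images rather than simply modifying existing ones.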
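For readers curious about the mechanics, the competition between generator and discriminator is usually written as a two-player minimax game. The objective below is the standard formulation from the original 2014 GAN paper, included here as a reference sketch; later GAN variants often modify this loss. Here $G$ is the generator, $D$ the discriminator (outputting the probability that an image is real), $p_{\text{data}}$ the distribution of real training images, and $p_z$ the random-noise distribution the generator samples from.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes $V$ up by assigning high probability to real images and low probability to generated ones; the generator pushes $V$ down by producing images that the discriminator scores as real.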

Early GANs produced low-resolution, often surreal character concepts. While these images lacked the polish necessary for production use, they provided concept artists with unusual combinations and unexpected design elements that could spark creative thinking. Studios began incorporating these systems into their ideation phases, using AI-generated concepts as starting points rather than finished products.

Diffusion Models: A Quantum Leap Forward

Text-to-image diffusion models, which emerged around 2021 and 2022, marked another watershed moment in AI-assisted concept art. These models showed an unprecedented ability to generate high-fidelity images from text descriptions alone. Suddenly, concept artists could describe a character in natural language and receive a rendered visualization within seconds.

This capability dramatically accelerated the concept exploration phase of character design. Artists could quickly test dozens of variations by adjusting their text prompts, exploring design spaces that might have taken weeks to sketch manually. While the results still required artistic guidance and refinement, the quality gap between AI-generated concepts and human-created art narrowed significantly.

Modern diffusion-based character concepts showing remarkable detail and coherence

The Current Landscape: Specialized Character Generation Systems

Today's AI concept art tools have evolved into specialized systems designed specifically for character generation. These tools are built to account for anatomy, costume design, material properties, and even narrative archetypes. Rather than generating generic images, they aim to produce character concepts suited to practical implementation in games, films, or other media.

Features like consistent character generation across multiple poses, controllable style parameters, and integration with 3D pipelines have transformed these systems from curiosities into essential production tools. Art directors can now generate comprehensive character design explorations in hours rather than weeks.

The Artist's Role in an AI-Assisted Future

Despite these advances, the human concept artist remains crucial. AI excels at generating variations within known parameters but struggles with genuine innovation and with contextual requirements that haven't been explicitly encoded.

Today's most effective workflows combine AI generation with human curation, refinement, and contextual intelligence. Artists increasingly function as directors of AI tools rather than executing every brush stroke manually. This shift has expanded what's possible in pre-production, letting teams explore design directions more thoroughly than typical production constraints previously allowed.

Looking Forward: Multimodal Systems and Real-Time Collaboration

The next frontier in AI-assisted concept art appears to be multimodal systems that can accept and produce multiple kinds of input and output. Such systems aim to connect text descriptions, reference images, rough sketches, and even verbal feedback to produce progressively refined character concepts.

We're also seeing the emergence of collaborative interfaces where multiple artists can work with AI systems in real-time, guiding the generation process through immediate feedback loops. These developments point toward a future where the boundary between human and AI creativity becomes increasingly fluid and synergistic.

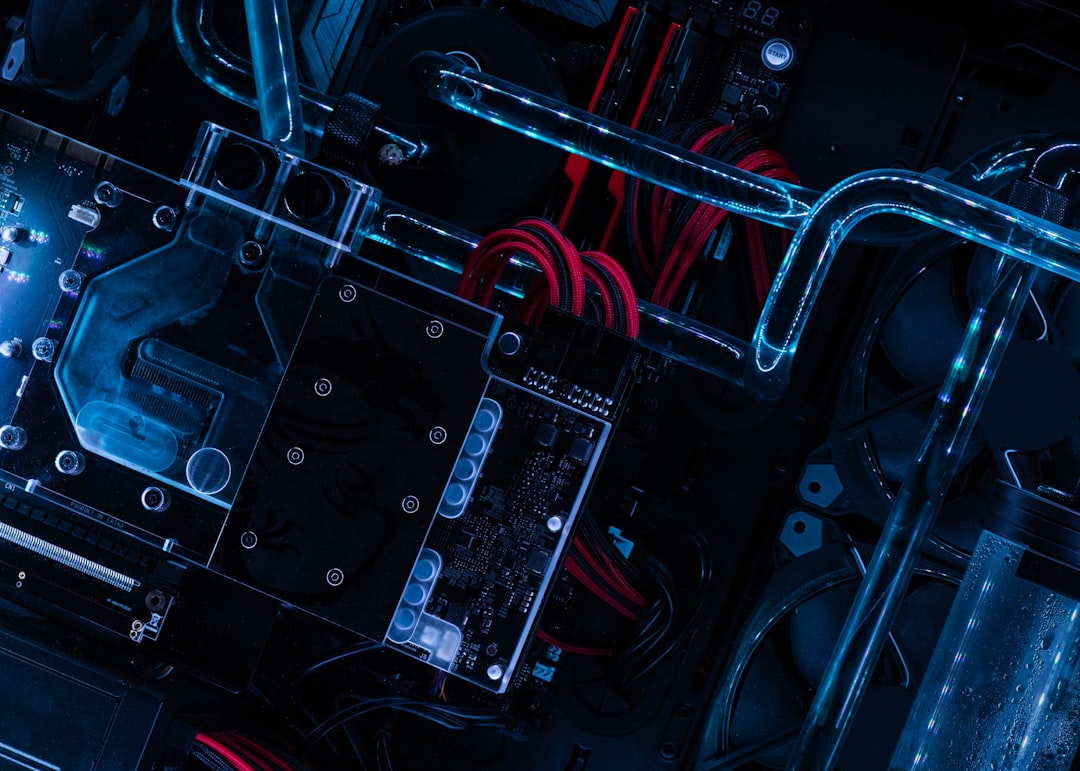

Modern collaborative interface showing multiple artists working with an AI system in real-time

Conclusion: A Transformed Creative Landscape

The evolution of AI in concept art is arguably one of the most significant shifts in visual creative practice of the past century. From simple style transfer algorithms to sophisticated character generation systems, these tools have fundamentally altered how concept artists approach their craft.

As we look to the future, the most successful concept artists will likely be those who embrace these tools while maintaining the human perspective and contextual understanding that gives art its meaning. The future of concept art isn't about AI replacing artists; it's about a new form of creative partnership that expands what's possible when human imagination meets computational generation.